When you set out to optimize a web page, whatever marketing strategy you follow, you must be aware of the guidelines Google requires.

Not everything comes down to reaching the top rankings and getting backlinks. Satisfying Google works strongly in a web page's favor, since Google's main objective is to give its users access to accurate, unique information written by “experts”. That’s why it continuously modifies and improves its algorithms so that each website gets the exposure it deserves.

Unfortunately, that’s where our concern appears: we can be penalized. Sometimes these penalties are deserved, if the technique used to optimize a website is illegitimate or does not meet Google's requirements. However, even if this is your case and you know you are doing something wrong, everything has a solution.

What is a Google penalty?

A Google penalty is a negative impact on a website's search rankings, applied because the site does not comply with Google's algorithm updates and/or fails a manual review.

The penalty can be a byproduct of an algorithm update or the intended result of someone's negative SEO / Black Hat SEO.

If your website's traffic has suffered a sudden drop and its position in the rankings has fallen as a result, it is probably because you have received a penalty from Google.

Recognize a sanction or penalty from Google

Nobody likes to be out of favor with Google, so we must follow the requirements of its algorithms to the letter. To do that, we start by listing the reasons why Google can penalize you. Here are eleven of them.

Before starting … What is Black Hat SEO or Negative SEO?

Negative SEO or Black Hat SEO is the set of unethical practices or techniques used to improve the positioning of your own site, or to sabotage the positioning of a competitor’s site, in search engines.

Among the most popular SEO black hat techniques we find:

- Copying content from other sites

- Buying links to your website

- Linking excessively from spam sites

- Hiding words or text from the user

- Publishing low-quality content with unnatural use of keywords

etc.

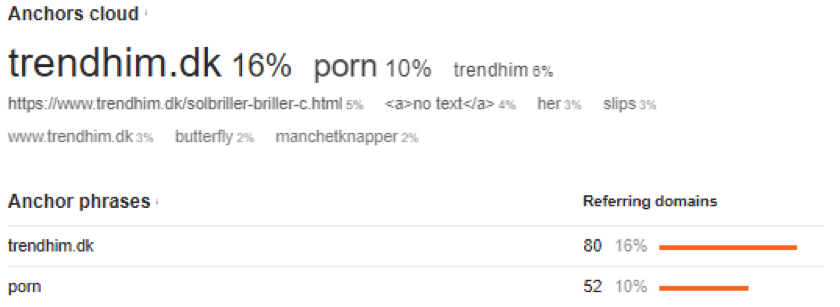

One of the best-known cases is Trendhim, a Danish men's accessories company that a year ago was the victim of a negative SEO attack in which more than 8,500 links pointed to its website using the term “porn”. This is the most widely used practice in negative SEO: making Google believe that a page is pornographic so that it drops in the rankings, which decreases its sales and increases those of a competitor.

1) Google Penguin Penalties: Buy links

Of all the people who own a website, how many have never bought a link during the life of their site? Few.

We all believe that buying links is fine, that there is nothing wrong with it since Google can never know whether we paid for a link or not. But are we right? The answer is no. Buying links amounts to manipulating our backlink profile, which can be understood as negative SEO in reverse, since in this case we are voluntarily buying links to our own website with anchor texts that we control.

Google has taken strict measures against these actions, which damage the presence and prestige of the websites that use them. Since 2012, the “penguin” (Google Penguin) has been responsible for demoting sites that have unnatural links, such as links from low-authority sites like directories or links with over-optimized anchor text.

2) Google Panda Penalties: Duplicate content

The Google Panda penalties appeared in 2011 and have to do with everything related to content.

Once again, what is Google's goal? To offer the best content to users. Is duplicate content the best content? Clearly not. The more duplicate content a website has, the further it will fall in Google. Google will judge a website with duplicate content to be of little use to users, and its rankings will let you know.

Plagiarism is not the only thing that Google takes into account when analyzing the content of a website; the quality of the content plays an equal or even more important role. You have to try to be unique and innovative when publishing content. Why invest time and effort in something that users can find on other websites?

These are the main reasons a website's rankings fall: if the content is not good, the search engines will have a bad image of the website. It is important to ensure that the content is unique and well written; for this you can use tools such as Copyscape or CopyGator.

Duplicating content does not only mean copying something literally. Another of the Google Panda penalties, one that few are aware of, concerns content spinning: taking something written one way and rewriting it so that it sounds different but says the same thing. Although many do not believe it, this also counts as duplicated content and is penalized by Google.
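As a rough local complement to tools such as Copyscape, the overlap between two texts can be estimated before publishing. The sketch below compares three-word sequences ("shingles") with a Jaccard score; the function names and the shingle size are assumptions made for illustration, and this is not how Google or Copyscape measure duplication. It catches copied or lightly edited text, but a thorough rewrite with synonyms will score low.

```python
import re


def shingles(text, size=3):
    """Return the set of consecutive word sequences of length `size`."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a, text_b, size=3):
    """Share of word sequences the two texts have in common (0.0 to 1.0)."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        # Texts shorter than `size` words cannot be compared this way.
        return 0.0
    return len(a & b) / len(a | b)
```

A score close to 1.0 means the two texts are nearly identical; where to draw the line is a judgment call, not a published threshold.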

3) Google Panda: Low quality content

The quality of your website's content is essential for reaching the highest positions in the search engines and generating natural backlinks. The more useful information your website provides to readers, the higher its quality will be.

When you add content to your website, keep in mind that it should be as informative as possible. Cover all the relevant points so that readers do not have to consult other websites to find more information.

If your website contains quality content, you will show your readers that you are an expert in your sector, and that will make them return to your website to find information. The same goes for Google. Google will be aware of the quality of your content and the effort you put into offering the best to your readers, and since you share the same goal, your rankings in the search engines will benefit.

Keep in mind that there are thousands of websites and pieces of content available in each Internet niche. However, Google shows only about 10 web pages for any topic on the first page of search results. The rest are buried in the following pages of results.

As a general rule of thumb, we can say that our friend Panda does not like pages with fewer than 300 words.
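The 300-word rule of thumb can be checked with a few lines of code. This is a minimal sketch that counts the words a visitor would see in an HTML page, skipping scripts and styles; the names `TextExtractor`, `visible_word_count` and `MIN_WORDS` are hypothetical, and the threshold is the figure from this article, not an official Google number.

```python
import re
from html.parser import HTMLParser

MIN_WORDS = 300  # rule of thumb from the article, not an official limit


class TextExtractor(HTMLParser):
    """Collects text nodes, ignoring the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def visible_word_count(html):
    """Count the words in the visible text of an HTML document."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    return len(re.findall(r"\w+", " ".join(extractor.chunks)))


def is_thin_content(html, min_words=MIN_WORDS):
    """True when the page has fewer visible words than the threshold."""
    return visible_word_count(html) < min_words
```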

4) Cloaking: Distorted content

The cloaking technique consists of distorting the content that search engines see when they read the site: a separate layer of content, served through deceptive redirects, makes search engines believe that your website deals with something different.

This technique causes Google’s crawlers to access content different from what users see, and Google sanctions it harshly when it is detected.

5) Keyword-Stuffing: Excessive use of keywords

Again, this is one of the most common techniques in black hat SEO. Google analyzes the keywords present on the website and acts accordingly. If the number of keywords is unusually high, the website will be penalized, since a high keyword density is a sign that the content is of poor quality.

Technically this is called keyword stuffing. By discouraging it, Google wants content to be useful and valuable for the user, with natural wording that is easy to read and understand. Thus, if the text uses synonyms and related words, it will be much more valuable to Google than if it constantly repeats keywords without meaning.
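Keyword density can be measured directly. The sketch below divides the number of times a single-word keyword appears by the total number of words; the function name is hypothetical, multi-word phrases are not handled, and Google does not publish a density at which a page is penalized, so the result is only a signal for your own review.

```python
import re
from collections import Counter


def keyword_density(text, keyword):
    """Fraction of the words in `text` that are exactly `keyword`."""
    words = re.findall(r"\w+", text.lower())
    if not words:
        return 0.0
    return Counter(words)[keyword.lower()] / len(words)
```

For example, in the text "cheap shoes cheap shoes buy cheap shoes" the keyword "cheap" makes up three of the seven words, a density far above what natural wording would produce.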

Five years ago everything was based on the number of links, not on their quality. This has now changed, and Google rewards the quality of the backlinks received more than their quantity.

Taking this to the level of keywords: until a few years ago, a technique widely used by SEO experts to position a page was to use the same anchor text for all its links, to show Google which term they wanted to rank for. However, since the Google Penguin update, the excessive use of one anchor text is highly penalized.

That is why it is advisable to avoid using the same keyword in every anchor text. To follow the anchor texts and the link profile more closely, it is advisable to use tools such as Ahrefs.
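Given a list of anchor texts exported from a backlink tool, the share of each one can be computed to spot an over-used anchor. This is a minimal sketch; the function name and the sample anchors are hypothetical, and what counts as "too concentrated" is left to your judgment because no official limit exists.

```python
from collections import Counter


def anchor_text_shares(anchor_texts):
    """Return (anchor, share) pairs, most frequent first.

    Anchors are compared case-insensitively and without surrounding spaces.
    """
    normalized = [text.strip().lower() for text in anchor_texts if text.strip()]
    counts = Counter(normalized)
    total = len(normalized)
    return [(anchor, count / total) for anchor, count in counts.most_common()]


# Hypothetical sample data for illustration only.
sample = ["Men's watches", "men's watches ", "Trendhim", "click here"]
shares = anchor_text_shares(sample)
```

Here the first entry of `shares` shows that one anchor accounts for half of the links, which is the kind of concentration worth reviewing.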

6) Google Penguin: Exchange of links

A link exchange consists of placing a link to another website on your website and, in return, the other website placing a link to your website on theirs.

Currently, link exchanges are not as effective as they used to be. Search engines have become smarter and these links have become detectable. By participating in link exchanges, you run the risk of being penalized or even banned by the search engines. Again, this can be understood as a technique to manipulate PageRank.

7) Slow speed – Slow response time

People tend to wait three or four seconds for a page to load; if it has not loaded in that time, we leave and do not return to that website. Something similar happens with Google.

Websites that take too long to load tend to rank lower. The cause is usually non-optimized images, numerous advertisements, and so on. These websites annoy both users and search engines and, consequently, harm your SEO.

Nothing tests our patience more than a slow website. Nowadays, nobody has time to wait minutes for a website to load its content. A slow page will cause users to leave and look for faster websites. To remedy this, you can add caching or use a CDN.

A tool you can use to check whether your page's loading speed is adequate is Google's PageSpeed Insights: enter the URL of your page and it reports which elements to optimize in order to speed it up and meet the standards Google expects.

8) Broken links – Error 404

Google always tries to ensure that the content it offers its users is up to date, which is why it takes into account all the errors of each website, even the most hidden ones. These hidden errors can be, for example, 404 errors or broken links. Such errors signal that the website is failing to provide a user-oriented experience.

If the links are outdated, Google will assume that you do not care about the user experience. To remedy this, it is important to periodically review the links included on the website.

In Google Search Console, you can find the crawl errors that Google has found on your website and work on correcting them. Another highly recommended tool for this task is Screaming Frog: you just paste in the URL of your site and it returns the status of each of its pages. If you find a 404, identify its cause and fix it so that the page can be displayed again.
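For a quick check of a single page without a dedicated tool, the periodic link review can be sketched with the Python standard library. The names `extract_links`, `http_status` and `find_broken_links` are hypothetical, and the sketch makes several assumptions: it only looks at `<a href>` links, it uses HEAD requests (which some servers reject), and it treats any status of 400 or above, or no response at all, as broken.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError, URLError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html, base_url):
    """Return absolute http(s) URLs linked from the page."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    urls = [urljoin(base_url, href) for href in collector.links]
    return [url for url in urls if url.startswith(("http://", "https://"))]


def http_status(url, timeout=10):
    """Return the HTTP status code for `url`, or None if unreachable."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        return error.code
    except URLError:
        return None


def find_broken_links(html, base_url, get_status=http_status):
    """Return the linked URLs that answer with an error or not at all."""
    broken = []
    for url in extract_links(html, base_url):
        status = get_status(url)
        if status is None or status >= 400:
            broken.append(url)
    return broken
```

The `get_status` parameter lets you swap in a different checker, for example one that falls back to GET when a server refuses HEAD requests.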

9) Spam comments

Most websites have an automatic system for detecting unwanted comments, but some comments still slip through. It is therefore important to keep an eye on the comments the website receives at all times: if they are spam, the website will be harmed.

Always review users' comments and check that they do not link to sites unrelated to the content you have shared, that they do not include an excessive number of links, and so on. If a comment does, you can delete it and/or mark it as spam, so that Google sees that you care about the content of your site and keep it up to date.

10) Deceptive Structuring of Pages: On the use of H1 titles

Nobody doubts that carefully organized content helps SEO. There is no better content than content that is well structured and easy for users to see, but that does not mean we have to fill our pages with H1 tags.

The header tag, or <h1> tag in HTML, is usually the title of a post or other emphasized text on the page. It is usually the largest, most prominent text, and therefore the text that says the most about the content.

Excessive use of H1 tags can make Google believe that we are trying to stuff keywords into the content and manipulate its algorithms. Not to mention that it may trigger another penalty we have already discussed: the excessive use of keywords.

As a general rule, each page of your site must have only one H1.
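The one-H1 rule is easy to verify automatically. This minimal sketch counts the `<h1>` opening tags in a page's HTML with the standard library parser; `H1Counter` and `count_h1` are hypothetical names chosen for illustration.

```python
from html.parser import HTMLParser


class H1Counter(HTMLParser):
    """Counts <h1> opening tags (tag names arrive lowercased)."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self.count += 1


def count_h1(html):
    """Return how many <h1> elements the HTML document opens."""
    parser = H1Counter()
    parser.feed(html)
    parser.close()
    return parser.count
```

A page passes the check when `count_h1(html) == 1`; a result of zero or of two or more points to a heading structure worth reviewing.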

11) Lack of optimization on mobile devices

All of us who have a website have noticed that most of our users visit it from a mobile phone. Fewer and fewer people browse from a computer.

In the past, we only worried about our page being optimized for computers, but now Google gives importance to mobile optimization. It is a fact that users prefer mobile phones: after all, they are easier to use and always at hand.

That is why, if web pages are not optimized for mobile devices, Google will interpret it as a sign that the website does not care about the user experience, and again the website will be penalized.

After analyzing these 11 of the many reasons that lead Google to penalize a website, the rest is in our hands. You have to be aware of the requirements of Google's algorithms at all times if you want a website to rank properly.

Google's goal is not to penalize web pages, but to offer the best experience and to benefit all those who offer quality content that is well written and not plagiarized. In short, all the websites that help make Google's goal a reality will benefit in one way or another.

________________

About the Author

Sara López Alaguero is Marketing Manager at Trendhim. She loves writing, fashion and communications. Sara studies Computer Engineering at VIA UC and is an expert in Online Marketing, SEO and Linkbuilding. In her spare time she works as a copywriter for websites and newspapers, and keeps a personal diary where she narrates her experiences and hobbies: sara-lopez.com. She has a passion for computer science and devotes her free time to researching this field and learning as much as possible.
